A thesis submitted in partial fulfilment of the requirements for the degree of Master in Business Information Technology (BIT), University of Twente. March 10, 2005.

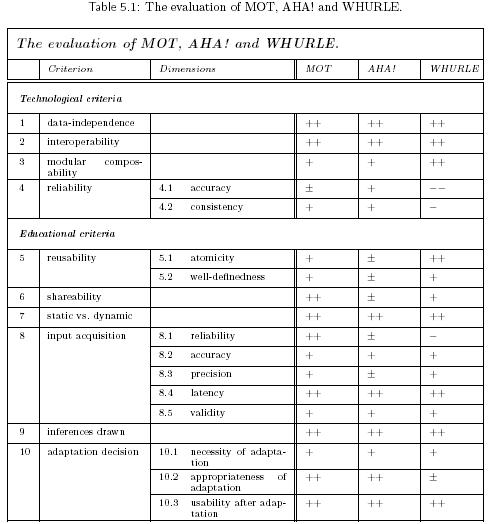

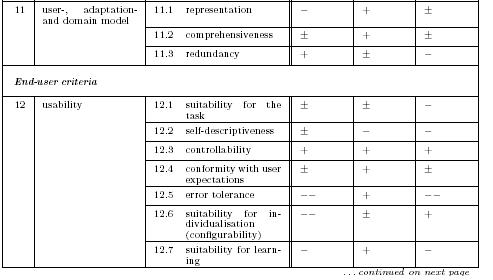

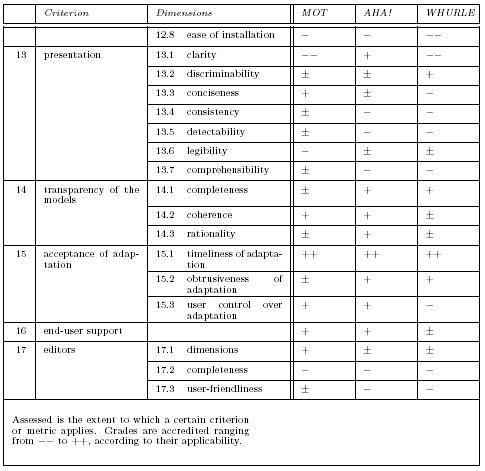

This report describes research on the authoring of Adaptive Educational Hypermedia (AEH). The main research goal is to develop a generic evaluation framework that makes it possible to assess AEH authoring applications. The framework is based on the concerns of the three different stakeholders involved in AEH authoring, which lead to three groups of evaluation criteria: technological, educational/conceptual and end-user related. Another group of results is derived from tests on MOT, AHA! and WHURLE, three AEH systems developed within the scope of the EU Minerva/ADAPT project. The evaluation framework is the result of a literature study on AEH research, quality factors and existing evaluation models. During the development process, the framework has been tested on MOT, AHA! and WHURLE. The generic framework for evaluating AEH authoring systems presented in this report is both valuable and useful. However, the model was developed using only one test course, applied once to each system, by one researcher. Because of this, some criteria in the framework are, to a certain extent, considered questionable. These questionable criteria, and the fact that each test was carried out only once, are reasons for further research on the framework. MOT, AHA! and WHURLE proved to be suitable for authoring adaptive course materials. They belong to the front line of new educational software, an area already shifting rapidly from a supportive to an intelligent character. The tests show that AHA! is somewhat ahead of the other two systems on certain points, and WHURLE somewhat behind. Reasons are the longer lifespan of the AHA! system and the fact that WHURLE only offers a presentation function and no proper module for course development. All in all, considering that the three systems are still under development, MOT, AHA! and WHURLE will eventually be 'good' AEH authoring systems. The basic conceptual structures are solid and well thought-out. 
However, the developers have to reconsider and perhaps extend the adaptive features, improve the general facilities, such as the editors, and work on the user-friendliness of the systems.

No one will disagree, I think, that graduation time is one of the strangest periods of a man's life. Well, what made mine so out of the ordinary? Is it the fact that I'm graduating at the university, while almost every BIT student graduates at a company? Or the fact that this is my second assignment, as the first one went up in smoke? Perhaps it's the fact that I had no first supervisor for the first three months. Or could it be the fact that I have lingered in Enschede for so long that makes it special? It could well be the fact that I, as a BIT student, had to deal with supervisors so diverse that it sometimes seemed impossible to unite all their concerns and comments, while at the same time our meetings were really interesting and at times even funny. Either way, I had a great time during the last months of my study. I accidentally came across the assignment on AEH authoring systems and the group of Behavioural Sciences one day, and from the first moment on Rik and I got on really well. The assignment attracted me very much, partly because of the enthusiasm Rik displayed. The first few months, when meetings with Rik and Italo were the order of the day, were mostly filled with installing and testing MOT, AHA! and WHURLE. I went to Eindhoven to meet with the developers of the systems. Together with Rik and Italo I went to the AH 2004 conference, also in Eindhoven, which made for some remarkable days. Two of the fellow researchers (Alexandra from MOT and Craig from WHURLE) even came to Enschede one day to discuss the Minerva/ADAPT project and help me with installing some new test software.

Eventually, I also started working on my report, and Bedir was of great assistance in keeping things going here. After Tanya joined the project, she more than made up for the time she was not around. The whole graduation project sped up and half a year later I am now presenting this report. Thanks go out to Alexandra for commenting on my work and to Craig, David and Natasha for assisting me with the software. I would like to thank Rik and Italo for letting me work with them on this assignment. It really was some experience to get to know these people, and not only because of the different views they displayed in comparison with the background of my own study. I would like to thank Bedir for his keen eye and structured approach all through the project; it certainly contributed to the overall quality of the research. I would like to thank Tanya for the way she guided me through the jungle a graduation process can sometimes be. Most of the time, I felt we were on the same track and our meetings were always fruitful.

Language issues in English were not a thing to worry about for me, as Grainne was really wonderful in assisting me with this. I'm very grateful to her for correcting my English writing the way she did. Writing this report in English was a good exercise for me personally, as was the fact that it is my first report ever edited completely in LaTeX. Special thanks go to all the people around me, as my supervisors at the university definitely weren't the only ones suffering from the ups and downs during my graduation. The time has come to say thanks to you: my friends, my roommates, my family and to Judith, for being there for me when I needed you all, even when I wasn't there myself.

Niels Primus, March 2005

1.1 Problem statement

According to Cristea [22, 36, 27], Devedzic [43], Brusilovsky [15] and many others [50, 39, 7, 44, 64, 65], the question is not whether adaptivity in educational hypermedia is necessary, but in what form it should be added. In order to answer this question, several research projects have been started. One of them is the Minerva/ADAPT project [35], funded by the European Union. Within the scope of this project, systems like MOT are developed to create a test environment for AEH authoring systems, so that new techniques can be integrated and tested in a real-life setting. This report stresses the importance of continuous research in the field of AEH and discusses several evaluations of AEH systems in order to offer recommendations to the community of researchers involved in the field. Devedzic [43] gives an analysis of the key issues in next-generation web-based education. He refers to problems like the need for sharing and reuse of material, the proliferation of standards for communication and the ability of end-users (teachers) to deal with ICT as the key challenges for the field. Others, like Cristea and Garzotto [36], emphasise the soundness of the design as the most important factor in AEH authoring.

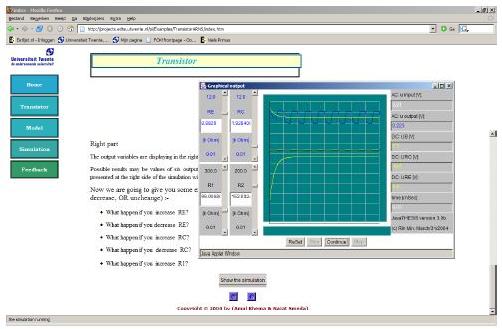

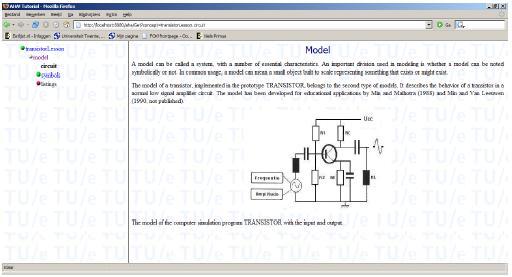

The University of Twente participates in the aforementioned EU Minerva/ADAPT project through the research of the Faculty of Behavioural Sciences [57, 58]. The aim of the project is to evaluate the systems MOT, AHA! and WHURLE by building a multimedia (six-dimensional) test course on a transistor circuit, including an intelligent simulation. The goal is to determine the performance of the products generated in MOT, AHA! and WHURLE. Two other goals are to find out how the characteristics of the test products match those of the original transistor product (which was built in HTML, contained a Java transistor applet and had no adaptive features) and how they match the characteristics specified by the three systems. The Twente researchers also want to investigate how building blocks (discrete audio-visual components and standard elements from libraries) can enrich open learning environments and make them more efficient and effective for all learning styles. Their project should finally generate some good and bad practices (and techniques), some quality factors for courseware authoring (and methods) and practice for adaptation to pedagogical considerations as well as learners' cognitive profiles (learning or learner styles).

Learner styles have traditionally received little attention in research on AEH authoring applications. The main reason for this is that most current systems are developed by IT researchers and not by people with an educational background. The integration of learner styles in AEH systems is necessary in order to create real educational adaptive hypermedia. Examples of learner styles are the field-dependent and the field-independent style [72]. The first describes learners who prefer structures, social contents and materials related to their own experience. Field-independent learners perceive analytically, make concept distinctions and prefer impersonal orientation. 
Kolb [51] defines learner styles by introducing two axes: task (preferring to do or watch) and emotion (think or feel). Learner styles are established according to the position along the axes. Possibilities are activist (accommodator, do and feel: concrete-active); diverger (watch and feel: concrete-reflective); theorist (assimilator, watch and think: abstract-reflective) and pragmatist (converger, think and do: abstract-active).

In order to carry out their evaluation research, the group of Behavioural Sciences has planned to develop two test or demonstration courses. One is the fully adaptive six-dimensional transistor circuit course and the other is a lighter, stripped version of it. One of the original research objectives of the ADAPT project is to build a prototype adaptive authoring tool. Another goal concerns evaluation and will look at the prototype from as many angles as possible. With the prototype tool, the Twente members of the project were supposed to do their share of the evaluation, being a combined product/process evaluation. However, instead of one adaptive prototype system, the project led to several such systems, namely MOT, AHA! and WHURLE. This report describes the research that filled the gap formed by the new challenge of having to evaluate several systems instead of one generic AEH authoring application. Instead of evaluating one generic application for authoring AEH, a generic evaluation framework is constructed so that any AEH authoring system can be evaluated. As such, the study described in this report is embedded in the Twente sub project. It contributes to prototyping the three test examples in MOT, AHA! and WHURLE and evaluating them afterwards. The test products are valuable for the Faculty of Behavioural Sciences, because they can be used directly for evaluation and for studying the conceptual structure of the course material. 
This research also supports the conclusions and recommendations made in the Twente report. To recapitulate, the main problem this report focuses on is the lack of evaluation frameworks for Adaptive Educational Hypermedia authoring applications.

1.2 Research Objectives

As already mentioned in section 1.1 and described in Appendix A, the Minerva/ADAPT [35] project strives towards a "European platform of standards (guidelines, techniques and tools) for user modeling-based adaptability and adaptation". It hopes to reach this goal by generating new tools for authoring AEH, or Intelligent Tutoring Systems (ITS) as they are sometimes called. Three of these systems for authoring AEH are used in the research described in this report: MOT, AHA! and WHURLE. One of the ways to contribute to the development of standards is by designing an evaluation framework for AEH authoring applications. One of the objectives for the Twente sub project is a working multimedia (six-dimensional) transistor test lesson. Evaluating AEH is difficult because the underlying theories are either new or still under development, and there is no widespread agreement as to how the fundamental tasks (student modeling, adaptive pedagogical decision making, generation of instructional dialogues, etc.) should be performed [60]. The goal of this research is: To design a generic evaluation framework in order to assess AEH authoring applications. In AEH authoring applications, there are several stakeholders, all of which have different (sometimes overlapping) concerns. The generic nature of the framework is guaranteed by including the concerns of all stakeholders. The three groups of stakeholders are found in the educational or conceptual area (problem domain), in the technological area (solution domain) and in the end-user domain. The division into three domains is fixed: there is no superfluous domain and no domain is missing. In order to address the concerns of the stakeholders, criteria have to be formulated. These criteria are to be found in literature on the subjects most closely related to the field of AEH authoring: literature on AEH, on software quality, on existing evaluation frameworks for (educational) AH, on other methods, and so on. 
The research goal results in the following research question: What are the criteria by which AEH authoring applications can be evaluated?

1.3 Approach

In order to answer the main research question, seven research subquestions are formulated. Every question is necessary for a small part of the research. Together, they are sufficient to gather enough information to answer the main question.

The research commences with a literature study on both the current status of AEH technology and the technological, educational and end-user criteria for evaluating AEH authoring systems in order to set up a preliminary evaluation framework. This study, combined with a review of literature on software quality and existing evaluation frameworks, addresses the first four research subquestions. The preliminary evaluation model, based on related work, is then updated, taking into account the shortcomings of the original evaluation model. The criteria that are selected for the framework have to be both necessary and sufficient; a selection method has to be formulated for this purpose. The result is a final and generic evaluation framework, applied using the transistor circuit course on MOT, AHA! and WHURLE, resulting in recommendations in the field of AEH research. This covers research subquestion 7.

The research approach in figure 1.1 shows how the different parts of the research are interconnected. As already stated, there is no standard or agreed evaluation framework for measuring the value and the effectiveness of adaptation yielded by AEH [69]. The preliminary evaluation model for AEH authoring systems, partly emerging from the literature study, is updated during several test cases on MOT, AHA! and WHURLE, using a test course on transistors. As a result there is not only a generic model for the evaluation of AEH authoring systems, but there are evaluation results of the three systems as well. The generic nature of the final evaluation framework is guaranteed by the fact that it is based on the concerns of all stakeholders. The literature study, which gives a view on the complete field of AEH authoring, the existing evaluation frameworks and their shortcomings, and the tests on the different experimental systems provide the basis for the criteria. Because this research is a practice-oriented design research [73], it is important that the problem is properly identified and defined. This has already been handled in the preceding sections. A possible pitfall in this kind of research is a lack of awareness of the origin of the main problem and the connections it has with other problem areas. In order to tackle this obstacle, the literature study forms an essential part of the research. It explores the field of research and describes the backgrounds of the different areas. The research objective is described in the right form and contents. The form is: "Realising an assessment of the adaptivity in AEH authoring applications, by creating an evaluation framework". The soundness of the contents is established by fulfilling four criteria: usefulness, feasibility, distinctness and informativeness. The usefulness is made clear in section 1.1. 
On the one hand, this research presents a literature study and an evaluation tool, a valuable contribution to the field. On the other hand, this research assists in evaluating the three test systems of the Minerva project. A possible danger is that the project grows too large. In order to remain feasible, clear boundaries must be set, as described in this chapter. The goal of the project is very specific, namely developing an evaluation framework. This chapter clearly describes the knowledge necessary to obtain results, which makes the research goal informative.

1.4 Structure of the report

The first four research subquestions concern the theoretical background of this research. A view on the status of current AEH technology, including a description of the three Minerva/ADAPT systems (MOT, AHA! and WHURLE), is presented in the next chapter. This includes a description of the issues in these research areas. The third chapter describes literature on software quality, several existing frameworks for the evaluation of AEH systems and other background on the evaluation of AEH authoring systems. The fourth chapter presents the criteria for evaluating AEH authoring systems, resulting from the literature study. A method for selecting the criteria is also described. A generic evaluation framework is presented, which is based on the shortcomings of the existing ones. The evaluation model is further developed by applying it to MOT, AHA! and WHURLE. These tests are discussed in chapter five. Finally, conclusions are drawn and recommendations for the field of AEH are given in chapter six.

2.1 Authoring Adaptive Educational Hypermedia

The research on Adaptive Educational Hypermedia (AEH) has two pillars: adaptive hypermedia and educational systems. Adaptive hypermedia are applications best thought of as websites that automatically personalise themselves to users. A website is, in fact, an example of a hypermedia system. Educational systems are software applications specifically written for learning purposes. A few years ago, both educational and (adaptive) hypermedia software were presented on CD-ROM. The internet embraced these systems and they adapted themselves to the new challenges and possibilities offered. The rise of the WWW also caused the research on AEH to grow explosively. According to Cristea and Garzotto [36] it is obvious that both adaptive hypermedia and educational systems have certain advantages over other systems. Traditionally, educational systems are focused on course management and collaborative issues; however, they have to do without the personalisation needed for individual learning. The main feature that distinguishes adaptive hypermedia from normal hypermedia is the ability to serve each user individually. It performs a process called 'adaptation', in most cases on the basis of user models. The combination of the two systems described forms the basis for the success of AEH applications, now and in the future [15]. There are two main types of users in any AEH application. The first is the student, to whom the research in this report does not apply. The second type of user is the teacher or course designer: the author of the course. Authoring in this context means organising course materials and creating the intelligence that ensures the adaptiveness of the application.

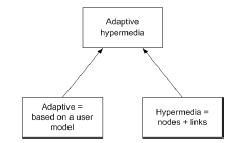

2.1.1 Adaptive hypermedia

Adaptive hypermedia (AH) is a relatively new direction of research on the crossroads of hypermedia (formerly known as hypertext) and user modeling [15]. A hypermedia system consists of information items such as documents or animations connected to each other by means of so-called hyperlinks. These links between content items (called nodes) are an essential part of the hypermedia concept.

Most existing hypermedia are built in a certain way and presented that way to all different kinds of users. It is obvious that different users have different interests, different browsing styles and so on. So the more users a certain hypermedia system has, the more need there is to make different 'views' for certain (groups of) users. The ideal situation would be to serve each user with content items and links specially prepared for him. This implies that the contents and the links available on the server should be prepared in such a way that differentiation is made possible and preferably kept simple. Of course, the latter only applies when it is useful to have a dedicated presentation for each user. A corporate website, for instance, is often initially a global information point for every user, either an individual or a company. Only when more information is requested would such a site offer specialised contents. The process of serving each user personalised contents in hypermedia systems is referred to as adaptation [8]. An adaptive system is a system capable of adapting itself to each user. It can create and update user models, in order to keep abreast of each user's progress. An adaptable system is tunable by the user himself. Most new AEH systems are both adaptable and adaptive. Initially, the user can set up some options himself. At a later stage, however, the system provides the adaptation automatically.
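The distinction between adaptable and adaptive behaviour can be illustrated with a small sketch. It is not taken from any of the systems discussed in this report; the class `UserModel`, the function `choose_view`, the `"level"` preference and the knowledge threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class UserModel:
    # Adaptable part: options the user sets himself (hypothetical keys).
    preferences: dict = field(default_factory=dict)
    # Adaptive part: knowledge per concept, maintained by the system.
    knowledge: dict = field(default_factory=dict)

    def set_preference(self, key, value):
        """Adaptable behaviour: the user tunes the system explicitly."""
        self.preferences[key] = value

    def record_visit(self, concept, gain=0.5):
        """Adaptive behaviour: the system updates the model automatically."""
        current = self.knowledge.get(concept, 0.0)
        self.knowledge[concept] = min(1.0, current + gain)


def choose_view(user, concept):
    """Use the user's own setting at first, the observed knowledge later."""
    if concept in user.knowledge:
        # The system has evidence, so adaptation is automatic (assumed threshold).
        return "advanced" if user.knowledge[concept] >= 1.0 else "basic"
    # No evidence yet: fall back on what the user configured himself.
    return user.preferences.get("level", "basic")
```

In this sketch the user's own setting is only used until the system has gathered evidence about a concept, which mirrors the shift from adaptable to adaptive behaviour described above.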

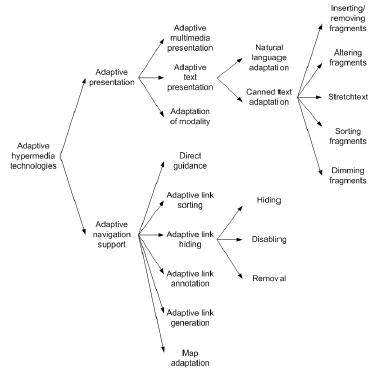

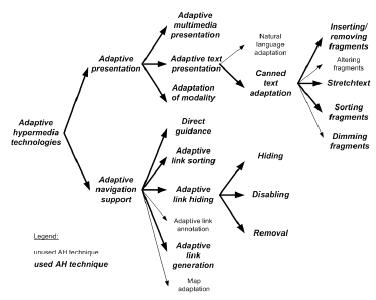

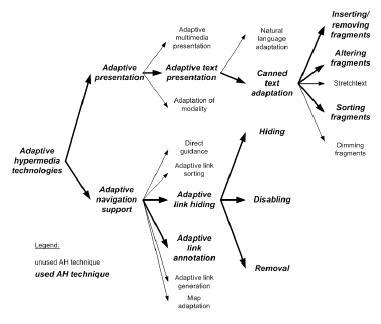

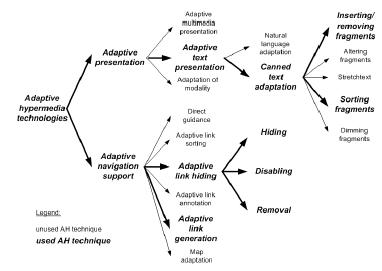

Brusilovsky [15] presents an extensive view of the taxonomy of adaptive hypermedia technologies in his landmark work Adaptive Hypermedia. The two main ways to create adaptivity are through adaptive navigation support and adaptive presentation. The methods and techniques giving shape to these technologies are first mentioned in his earlier work [14], which also gives an explanation of most of them. Figure 2.2 shows the taxonomy of adaptive hypermedia technologies as defined by Brusilovsky.

Adaptive presentation is the first class of adaptation. On the basis of a user model, which is based on the user characteristics and his progression in the course, the contents of the page presented to the user alter. This can be done in various ways. Contents, or fragments of contents, can be conditionally included or removed, their order can be changed, a multimedia item can be added, a piece of text can be highlighted, dimmed or stretched out when hovering over it; all according to the state the user model is in. The purpose is to challenge qualified users by presenting more (profound) information and not to burden beginners with knowledge that goes beyond their scope. Conversely, beginning students, whose knowledge level on a subject does not meet a certain standard, are presented with more explanatory information. At the same time, experienced users are not bothered by a lot of detailed information they already possess. Natural language adaptation is more or less the same as canned text presentation; it uses some of the same techniques. At the time of writing, the technique could not be classified further. Adaptation of modality is a high-level content adaptation technology that allows the creator of the courseware to choose between different types of media to present materials. In addition to traditional text, videos, applets or speech can now also be used to present information to the user.

Adaptive navigation support is guiding the user through the course materials in a personalised way, which can even be changed during the course. In practice, this means links to other parts of the course can be hidden or even removed, or, quite the reverse, emerge. The order of the navigational links can be changed or the user can be guided through without even seeing, let alone clicking, a link. All of this adaptation is done according to the information gathered and processed in the user model. 
An example would be a course of which the outline is shown on a website and where the different parts become conditionally clickable, and thus viewable, as soon as the student finishes a preceding chapter. The simplest form of adaptive navigation support is direct guidance, which decides for the user the next 'best' node (piece of information). Adaptive link annotation is augmenting a link with some form of comment that gives information on where the link points to. This can, for instance, be done by placing icons next to a link. Global and local maps can be altered to give the user an idea of his course domain; this alteration is called map adaptation. Generating new links splits up into three categories: discovering new useful links between documents and adding them permanently to the set of existing links; generating links for similarity-based navigation between items; and dynamic recommendation of relevant links. A possible danger in this process is the chance of creating endless lists that ruin the view of the user.

Since the start of AH research, educational hypermedia has been one of the largest areas of interest in the field of AH. Together with on-line information systems, they account for about two thirds of the research efforts in adaptive hypermedia [15]. Other areas in AH research include on-line help systems, information retrieval hypermedia (internet browsers and search engines), institutional hypermedia and systems for managing personalised views in information spaces.
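The adaptive presentation and adaptive navigation techniques described in this section can be sketched in a few lines. The sketch below is an illustration only and does not reproduce the implementation of any particular AH system. The function names, the integer knowledge levels and the outline format are assumptions made for the example.

```python
def render_page(fragments, knowledge):
    """Adaptive presentation: conditionally include content fragments.

    Each fragment is a (text, min_level, max_level) tuple; it is shown
    only when the user's knowledge level lies within that range.
    """
    return [text for text, low, high in fragments if low <= knowledge <= high]


def annotate_links(outline, finished):
    """Adaptive navigation: enable a chapter once its prerequisite is done.

    The outline is a list of (chapter, prerequisite) pairs, where the
    prerequisite is None for chapters that are always available.
    """
    links = []
    for chapter, prerequisite in outline:
        enabled = prerequisite is None or prerequisite in finished
        links.append((chapter, "enabled" if enabled else "disabled"))
    return links


def direct_guidance(outline, finished):
    """Direct guidance: suggest the first enabled chapter not yet finished."""
    for chapter, status in annotate_links(outline, finished):
        if status == "enabled" and chapter not in finished:
            return chapter
    return None
```

With an outline such as `[("ch1", None), ("ch2", "ch1")]`, the second chapter stays disabled until `"ch1"` appears in the set of finished chapters, which corresponds to the conditionally clickable course outline in the example above.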

2.1.2 Educational systems

The main purpose of any educational (computer) system has always been and will always be to support or even replace the classical human tutor [36]. Until recently, most educational systems could only offer supportive or collaborative tasks, like courseware management, calendars and email support. Examples of these systems are WebCT, Blackboard and TeleToP. As stated in chapter 1, the challenge for teachers lies in bridging the gap between the static domain of the course materials and the dynamic world of the student. The teacher has to fulfil the needs of each student individually, taking into account, among other things, the cognitive capabilities, the knowledge levels and the personality of the student. Any educational system in the future should deal with this challenge as well. (Footnote: On-line information systems are, e.g., electronic encyclopedias, eCommerce systems and handheld guides.)

The educational software world has a very clear understanding of the need for component-based programming in its field [68, 52]. Components are essential for moving towards a set of sharable and reusable course concepts. On the one hand, domain experts (teachers) can easily put together different building blocks of lesson material according to pedagogical strategies, thus avoiding technological issues and the problems and difficulties that come with them. On the other hand, the technological experts do not have to bother about what material or what learning plan to use, because the component-based architecture makes these issues transparent for them. Educational software is different from other software in the sense that teachers hardly ever voluntarily introduce technology in their work. Most of the time, IT implementation in schools is a top-down management requirement. A side effect is that designers of educational (authoring) software have difficulties developing new applications, as they do not receive full support from the people they are designing for.

2.1.3 Adaptive educational hypermedia

As stated earlier, the question is not whether adaptivity should be added to educational systems, but in what form. Issues which arise with this question are what kind of adaptation should be applied, to what it should respond, and when and how it should be introduced. In analogy with Cristea [22], who came up with the issues just mentioned, this report will get around the 'problem' of applying the adaptation by embracing the solution of authoring [27, 17, 16]. This shifts the pedagogical power to the people who should have it: the teachers and course creators. Thus, developing authoring tools for AEH is the main issue in (educational) AH at this time. One of the most important developments in AEH research has been the move from static to web-based hypermedia [15]. The internet provides both a challenging and an attractive platform for researchers to develop new AEH systems. One of the reasons for this is the vastly larger set of resources the internet offers compared with a stand-alone application. Furthermore, the internet is well known for its struggle for standards. In order to create communication between systems and to render course materials reusable and shareable, the research community at some point has to agree upon certain standards.

2.2 MOT, AHA! and WHURLE

In this section the three systems for AEH authoring resulting from the research in the Minerva/ADAPT project are described. These are MOT, AHA! and WHURLE. Each system is given a brief introduction, after which the theory behind the system is explained. Brusilovsky's taxonomy mentioned in section 2.1.1 will be used to describe the adaptation techniques used in each of the systems.

2.2.1 MOT

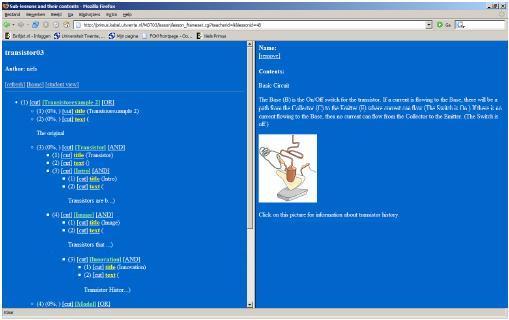

MOT [23, 25, 31, 32] is an authoring system for adaptive educational hypermedia developed at the Eindhoven University of Technology. MOT descends from MyEnglishTeacher, or MyET, "a web-based, agent-based, long distance teaching environment for academic English" developed in 2000 in Japan by Cristea and others [39]. Since the start of the Minerva/ADAPT project, there have been many new versions of MOT, starting with MyET. One of the most important aspects the developers of MOT are trying to establish is the separation of content items (course materials) and adaptation rules within the system [38]. This guarantees the most efficient reuse of course materials and enables the application of AH adaptation rules.

The basics of MOT. MOT is based on the LAOS model [34], a 5-layer adaptive authoring model for adaptive hypermedia, and LAG [29, 41], a 3-layer adaptation model. Both these models have been partly developed by the same research group that developed MOT [38]. The LAOS model introduces granularity in authoring levels, which supports the separation of information items and adaptation rules. This is necessary for the presentation of alternative contents. The LAG model provides granularity in adaptation rules and is integrated in the Adaptation Layer. MOT functions on the basis of concepts and lessons; information is stored in concepts, and lessons can point to one or more concepts to publish this information. A concept can be seen as a book, whereas a lesson is a presentation of one or more books, or of parts of a book.

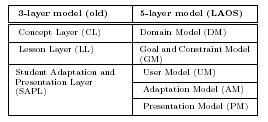

LAOS. The idea behind LAOS, Layered Adaptive hypermedia authoring model and its algebraic Operators, is to create a more flexible and powerful system for presenting information to different users and to let authors create more adaptive lessons, in a more adaptive environment [34]. Originally created as a 3-layer model [27], LAOS eventually became the 5-layer model of table 2.1. The main idea is to group the elements according to their possible usage, for later reuse [26].

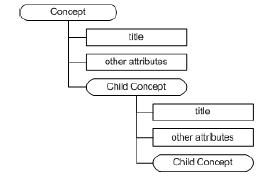

The Domain Model (DM) originates from the Conceptual Layer (CL), containing both atomic and composite concepts. This first layer is where the actual course information is stored. As shown in figure 2.3, a concept can contain child concepts. Each (child-) concept contains at least one attribute called title and it can contain more attributes, like text, an introduction or self-defined attributes. Within these attributes it is possible to store HTML. This allows authors to add just plain text to a content item, but also tables, images, videos, applets, and so on.

The hierarchical structure of concepts is implemented by means of a separate concept hierarchy entity, relating a super-concept to one or more sub-concepts. The relationship of concepts is based on commonalities between concept attributes.

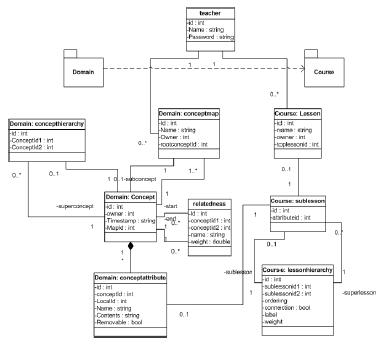

The Lesson Layer (LL) was replaced by the Goal and constraint Model (GM). The intermediate authoring step of adding the GM is necessary to build 'good' presentations; goals to give a focused presentation and constraints to limit the search space. In MOT, the goals and constraints are given by lesson constructions. A lesson contains sub-lessons which, in turn, are lessons, hence creating a hierarchical structure of lessons. A lesson attribute contains one or more concept attributes. This is the link with the concept domain. The idea is that the lesson combines pieces of information that are stored in the concept attributes in a suitable way for presentation to a student. One of the lesson attributes contains a holder which contains the actual sub-lessons in a specified order.
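The relation between concepts and lessons described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names `Concept` and `Lesson`, the `render` method and the sample course content are hypothetical and are not taken from MOT's actual database schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Concept:
    """A domain concept: named attributes plus optional child concepts."""
    attributes: Dict[str, str]  # must at least contain "title"; values may hold HTML
    children: List["Concept"] = field(default_factory=list)

    def __post_init__(self) -> None:
        if "title" not in self.attributes:
            raise ValueError("every concept needs a 'title' attribute")


@dataclass
class Lesson:
    """A lesson node: at most one concept attribute plus ordered sub-lessons."""
    content: Optional[Tuple[Concept, str]] = None  # (concept, attribute name)
    sub_lessons: List["Lesson"] = field(default_factory=list)

    def render(self) -> List[str]:
        """Flatten the lesson tree, in order, into the referenced attribute values."""
        out: List[str] = []
        if self.content is not None:
            concept, attribute = self.content
            out.append(concept.attributes[attribute])
        for sub in self.sub_lessons:
            out.extend(sub.render())
        return out


# Hypothetical course content: one concept with one child concept.
xml = Concept({"title": "XML", "text": "<p>XML is a markup language.</p>"})
xslt = Concept({"title": "XSLT", "text": "<p>XSLT transforms XML.</p>"})
xml.children.append(xslt)

# The lesson reuses attributes from the concept domain in its own order.
lesson = Lesson(sub_lessons=[
    Lesson(content=(xml, "title")),
    Lesson(content=(xml, "text")),
    Lesson(content=(xslt, "text")),
])
print(lesson.render())
```

Because a lesson only points to concept attributes, the same concept can be reused in several lessons without copying its contents.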

The Student Adaptation and Presentation Layer (SAPL) is extended to a User Model (UM), an Adaptation Model (AM) and a Presentation Model (PM). The UM is designed in conformity with the DM and the GM and thus contains concepts and relationships between concepts. In MOT, the user model does not necessarily need to be an overlay of the domain model, because other relationships between variables in the user model can exist alongside those between the concepts that match the domain model. The adaptation model is implemented through the LAG model, discussed in the next section. The presentation model must take into account the physical properties and the environment of the presentation and provide the bridge to the actual code generation for the different platforms.

In the ideal situation, all the layers in the old 3-layer model as well as in the LAOS model should be supported by the Adaptive Engine (AE). The higher the degree of support offered by the AE, the more adaptivity can be applied. As stated, the LAOS model is used to separate the levels of specification. To support this, MOT functions on the basis of a MySQL database, whose table separation scheme instantiates the logical separation of the different levels in the LAOS model. XML, by contrast, does not provide the solid data representation a database offers. However, XML is good for representing hierarchical data without many cross-relations. Because of this, and because MOT aims at offering course materials to other AEH systems, the actual output for the adaptation engine is in HTML format. In the specific case where MOT transfers course materials to AHA!, XML is generated.

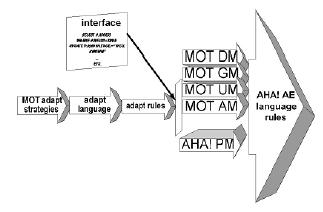

LAG. The functionality of the adaptation model was improved by integrating a 3-layer model into LAOS, called LAG (three Layers of Adaptation Granularity) [38, 29]. The idea of the three layers is that the medium and the high level function as a wrapper for respectively the low and the medium level; it presents the lower level functions in a more simple way to the layer above. The lowest level contains direct adaptation rules, like IF-THEN statements or condition-action (CA) rules. The medium level consists of an adaptation language; this is a kind of programming language which, after compilation, outputs a set of adaptation rules.

The highest level deals with adaptation strategies; these are a sort of function calls for the adaptation language and can represent pedagogical or cognitive styles.
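The wrapping of the three LAG levels can be illustrated with a minimal sketch. It assumes a user model stored as a flat dictionary. The names `Rule`, `show_if_known` and `sequential_strategy` are hypothetical and do not reproduce the actual LAG adaptation language.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

UserModel = Dict[str, float]


@dataclass
class Rule:
    """Low level: a single condition-action (IF-THEN) rule."""
    condition: Callable[[UserModel], bool]
    action: Callable[[UserModel], None]


def show_if_known(prerequisite: str, target: str, threshold: float) -> Rule:
    """Medium level: one language construct that compiles to a low-level rule."""
    def condition(um: UserModel) -> bool:
        return um.get(f"{prerequisite}.knowledge", 0.0) >= threshold

    def action(um: UserModel) -> None:
        um[f"{target}.show"] = 1.0

    return Rule(condition, action)


def sequential_strategy(concepts: List[str], threshold: float = 0.5) -> List[Rule]:
    """High level: a strategy that unlocks each concept once the previous one is known."""
    return [show_if_known(prev, nxt, threshold)
            for prev, nxt in zip(concepts, concepts[1:])]


def apply_rules(rules: List[Rule], um: UserModel) -> None:
    """Fire every rule whose condition holds for this user model."""
    for rule in rules:
        if rule.condition(um):
            rule.action(um)


# One strategy call expands into two low-level rules.
rules = sequential_strategy(["intro", "basics", "advanced"])
um: UserModel = {"intro.knowledge": 0.8, "basics.knowledge": 0.2}
apply_rules(rules, um)
print(um)
```

The author only calls the strategy. The rules it expands into stay hidden unless finer control is needed.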

As MOT is meant to be a system for the (adaptive) authoring of adaptive educational hypermedia systems, the presentation layer (PM) is not really worked out. There is a Student View, but this really is nothing more than a fixed listing of all the concepts, where all adaptation already has been applied. The idea behind MOT is to present a user-friendly authoring system for creating lessons, which can be transferred to other adaptive educational (delivery) systems such as AHA! or WHURLE.

Figure 2.4 shows the integration of LAG in LAOS, LAOS in MOT and MOT in AHA!. The latter is one of the latest results in the ADAPT project: the coupling between MOT and other systems.

In the class diagram of MOT (Figure 2.5) we see the splitting of concepts (Domain) and lessons (Course). The most interesting link is the connection between concept attribute and sub-lesson. Each attribute is used in one sub lesson and every sub lesson uses zero or one attributes for its contents.

Adaptation techniques in MOT

The adaptive hypermedia methods and techniques present in MOT [25] (see figure 2.6) can be found in Brusilovsky's taxonomy as described in section 2.1.1. The methods and techniques are either present or not; there is no way to partially implement a certain technique. If a certain method or technique is present in MOT, it is printed in bold face in the taxonomy. They have been identified in cooperation with the developers of the system.

2.2.2 AHA!

The AHA! system, or "Adaptive Hypermedia Architecture", was designed and implemented at the Eindhoven University of Technology, and sponsored by the NLnet Foundation through the AHA! project, or Adaptive Hypermedia for All (Footnote here: The information in this section is mainly derived from [6] and [11]). AHA! is an open source general purpose adaptive hypermedia system, through which very different adaptive applications can be created. AHA! was originally developed by De Bra and others in 1996/1997 to support an on-line course with some user guidance through conditional (extra) explanations and conditional link hiding.

Adding adaptivity in AHA! is normally achieved by creating concepts and concept relationships. This is the way best supported by AHA!'s authoring tools. Adaptivity can, however, be added through other means, by low-level and/or advanced features. An example of a low-level authoring tool is the Concept Editor, which makes it possible for an author to add adaptation rules by hand.

The Concept Editor and other authoring tools are discussed later on in this section.

The basics of AHA!

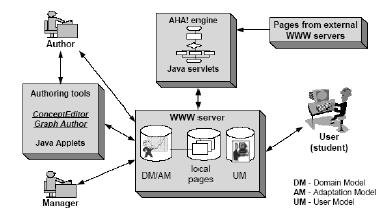

For the most part AHA! works as a web server. Users request pages by clicking on links in a browser. AHA! delivers the pages that correspond to these links. However, in order to generate these pages AHA! uses three types of information: the domain model, the user model and the adaptation model.

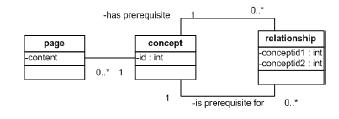

The domain model (DM) contains a conceptual description of the application's contents. It consists of concepts and concept relationships. Concepts can be used to represent topics of the application domain, for instance, subjects to be studied in a course. In AHA!, every page that can be presented to the end-user must have a corresponding concept. A concept, though, does not necessarily need a corresponding page. Concepts are linked to each other by relationships; one concept can, for instance, be a prerequisite for another concept. In AHA!, prerequisite relationships, which are predefined, result in changes in the presentation of hypertext link anchors. Every concept, except for the uppermost parent concept, has a parent concept. All concepts can contain child concepts and can have siblings.

The adaptivity in AHA! is based on concepts stored in the User Model (UM). The UM is updated each time a user visits a page, which is related to a concept. A concept contains several attributes (and attribute values) such as, for example, a knowledge level indicator. These attributes (their values) can be updated at a visit and then be propagated to attributes of other concepts. In this way, more, or other, information (stored in other concepts) becomes available. The UM is an overlay model, which means that for every concept in the DM there is a concept in the UM. Besides this, the UM can contain additional concepts that have no meaning in the DM.

The UM always contains an adaptable concept called personal, which contains attributes describing the user. Each user can personally adapt the values of the attributes stored in the personal concept. Obvious attributes are login information and addresses, more interesting attributes are, for instance, knowledge level indicators of a certain research topic.

The adaptation model (AM) is what drives the adaptation engine. It defines how user actions are translated into user model updates and into the generation of an adapted presentation of a requested page. The AM consists of adaptation rules that are actually event-condition-action rules. Figure 2.8 shows the architecture of AHA! and the three models. As can be seen, Java servlets interact with the combined domain/adaptation model and with the user model, in order to carry out the adaptation rules that perform the updates of the user model.

Whenever a concept (or a concept corresponding to a page) is requested, AHA! starts by executing the rules associated with the access attribute (Footnote here: The access attribute is a system-defined attribute that is used specifically for the purpose of starting the rule execution. ). After this, all the other rules are executed and the attributes of the associated concepts are updated accordingly. Now AHA! starts processing the requested page. This involves the conditional inclusion of fragments or objects, the hiding or annotation of links and, of course, passing all other contents to the browser. The result is a unique page, suited for a single user.
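The request cycle described above (access rules first, then the remaining rules, then page processing) can be sketched as follows. This is a simplified illustration, not AHA!'s servlet code: the rule representation, the concept names and the page fragments are all hypothetical, and link hiding or annotation is left out.

```python
from typing import Callable, Dict, List, Tuple

UserModel = Dict[str, Dict[str, int]]
Condition = Callable[[UserModel], bool]
Action = Callable[[UserModel], None]
Rule = Tuple[Condition, Action]


def fresh_user_model() -> UserModel:
    """Overlay model: one attribute set per concept of a hypothetical course."""
    return {name: {"knowledge": 0} for name in ("course", "xml", "xslt")}


def always(um: UserModel) -> bool:
    return True


def xml_unknown(um: UserModel) -> bool:
    return um["xml"]["knowledge"] < 50


def xml_known(um: UserModel) -> bool:
    return um["xml"]["knowledge"] >= 50


def mark_xml_read(um: UserModel) -> None:
    um["xml"]["knowledge"] = 100


def propagate_to_course(um: UserModel) -> None:
    um["course"]["knowledge"] = max(um["course"]["knowledge"], 50)


ACCESS_RULES: Dict[str, List[Rule]] = {"xml": [(always, mark_xml_read)]}
OTHER_RULES: List[Rule] = [(xml_known, propagate_to_course)]
PAGES: Dict[str, List[Tuple[Condition, str]]] = {
    "xml": [(always, "<p>XML basics.</p>")],
    "xslt": [(always, "<p>XSLT basics.</p>"),
             (xml_unknown, "<p>Short recap of XML.</p>")],
}


def run(rules: List[Rule], um: UserModel) -> None:
    """Execute every rule whose condition holds."""
    for condition, action in rules:
        if condition(um):
            action(um)


def handle_request(concept: str, um: UserModel) -> str:
    run(ACCESS_RULES.get(concept, []), um)  # 1. rules tied to the access attribute
    run(OTHER_RULES, um)                    # 2. remaining rules, e.g. propagation
    # 3. build the page: include only fragments whose condition holds
    return "".join(text for condition, text in PAGES[concept] if condition(um))


um = fresh_user_model()
print(handle_request("xslt", um))  # recap included: XML not yet known
print(handle_request("xml", um))   # visiting XML updates the user model
print(handle_request("xslt", um))  # recap dropped on the second visit
```

The same page request yields different output before and after the XML page has been visited, which is the sense in which the resulting page is suited to a single user.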

The AHA! system uses either XML files or a MySQL database to store the domain, the adaptation and all the user models. Since AHA! is based on Tomcat servlet technology, it should, in theory, be possible to use it with any Java-enabled platform, on any Java-based (Tomcat) web server. As said before, AHA! offers several authoring tools for adding adaptation to selected contents.

The Concept Editor is a graphical, Java applet based tool to define concepts and adaptation rules. It uses an (author defined) template to associate a predefined set of attributes and adaptation rules with each newly created concept. It is a low-level tool in the sense that all adaptation rules between concepts must be defined by the author. Many applications have a number of constructs that appear frequently, e. g., the knowledge propagation from page to section to chapter, or the existence of prerequisite relationships. This leads to a lot of repetitive work for the author.

The Graph Editor is also a graphical, Java applet based tool, but it uses high-level concept relationships. Again, when concepts are created, a set of attributes and adaptation rules is generated. But this tool also has templates for different types of concept relationships (also defined by the author). Creating knowledge propagation, prerequisite relationships or any other relationship is just a matter of drawing a graph structure using this graphical tool. The translation from high-level constructs to the low-level adaptation rules is done automatically, based on the templates.
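The automatic translation from high-level relationships to low-level rules can be pictured as template expansion. The sketch below is an assumption-laden illustration: the template texts, the relationship names and the rule syntax are invented for the example and are not AHA!'s real template or rule format.

```python
from string import Template
from typing import Dict, List, Tuple

# Hypothetical author-defined templates: one rule pattern per relationship type.
TEMPLATES: Dict[str, Template] = {
    "prerequisite": Template(
        "IF $source.knowledge < 50 THEN $target.suitability := false"),
    "knowledge_propagation": Template(
        "IF $source.access THEN $target.knowledge += 30"),
}

# An edge in the author's graph: (source concept, relationship type, target concept).
Edge = Tuple[str, str, str]


def expand(graph: List[Edge]) -> List[str]:
    """Translate each drawn relationship into a low-level rule string."""
    return [TEMPLATES[kind].substitute(source=src, target=dst)
            for src, kind, dst in graph]


graph: List[Edge] = [
    ("xml", "prerequisite", "xslt"),
    ("page1", "knowledge_propagation", "chapter1"),
]
for rule in expand(graph):
    print(rule)
```

The author draws two edges and obtains two rules. With the Concept Editor, each of these rules would have to be written by hand.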

The Form Editor lets authors create forms, which are used for changeable attributes to be included in an (X)HTML presentation. The end-user is then able to adapt the values of these attributes through the form accompanying a certain application.

Furthermore, there is a module available for adding multiple choice tests to an application. This lets the system automatically select questions and answers to present. The Layout Manager provides authors with the possibility to change the look & feel of a course.

Instead of creating AHA!-specific tools it is possible to let authors develop applications for other adaptive hypermedia systems and translate them to AHA!. Given the fact that AHA! offers a lot of (low-level) functionality this should be possible for many systems.

Adaptation techniques in AHA!

The adaptive hypermedia methods and techniques present in AHA! (see figure 2.9) can be found in Brusilovsky's taxonomy as described in section 2.1.1. As with the taxonomy of those used in MOT, they are boldfaced. All the methods and techniques that are used are pointed out and explained by the developers in literature provided by them [6].

2.2.3 WHURLE

WHURLE [Footnote 3] (Web-based Hierarchical Universal Reactive Learning Environment) [61] was designed around 2001 at the University of Nottingham to provide a discipline-independent framework that manages easily reusable contents. WHURLE is pedagogically flexible and has the capability of implementing adaptation. An important feature of this framework is that it distinguishes between three types of authors of educational hypermedia: subject experts, teachers and technical authors. The subject experts are responsible for the contents, the teachers for the implementation of those contents and the technical authors for the user model of the adaptation and the appearance and behaviour of the system.

The basics of WHURLE

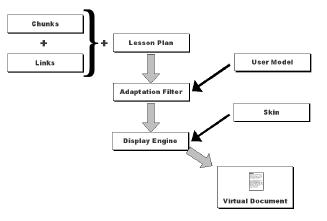

The student is presented a lesson, consisting of several atomic units called chunks, which are the smallest possible conceptually self-contained units of information that can be used by the system. This can be a text paragraph, a picture, a simulation or even a complete book (which would, of course, not correspond to the purpose of a chunk). Besides chunks, lessons contain a lesson plan, which is a default pathway through the chunks. The lesson plan is filtered by the adaptation filter that implements the user model based upon data stored in the student's user profile.

Figure 2.10 shows the modular system architecture of the WHURLE framework. A composite node tree is constructed from all of the specified chunks, the links and the lesson plan. This is then processed by the adaptation filter (an XSLT stylesheet) which implements the user model. The output document is then processed by the display engine (another XSLT stylesheet) which overlays the skin (the cosmetic appearance) and generates the auto navigation system. The output document of the display engine is the virtual document served to the user. It consists of dynamic HTML and should, therefore, be capable of being displayed in most web browsers.
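The processing chain described above can be sketched in a few functions. Note the swapped-in technique: WHURLE implements the adaptation filter and the display engine as XSLT stylesheets, whereas this sketch uses plain Python functions to show the same staging. The `Chunk` class, its `level` field and the sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Chunk:
    chunk_id: str
    body: str
    level: str = "any"  # hypothetical metadata used by the filter


def build_node_tree(lesson_plan: List[str], chunks: Dict[str, Chunk]) -> List[Chunk]:
    """Resolve the lesson plan (the default pathway) into an ordered list of chunks."""
    return [chunks[chunk_id] for chunk_id in lesson_plan]


def adaptation_filter(tree: List[Chunk], profile: Dict[str, str]) -> List[Chunk]:
    """Keep chunks meant for every learner or for this learner's level."""
    return [chunk for chunk in tree if chunk.level in ("any", profile["level"])]


def display_engine(tree: List[Chunk], skin: str) -> str:
    """Overlay the skin and generate simple navigation links."""
    nav = "".join(f'<a href="#{c.chunk_id}">{c.chunk_id}</a>' for c in tree)
    body = "".join(f'<div id="{c.chunk_id}">{c.body}</div>' for c in tree)
    return f'<body class="{skin}"><nav>{nav}</nav>{body}</body>'


chunks = {
    "intro": Chunk("intro", "What is XML?"),
    "recap": Chunk("recap", "Markup refresher.", level="novice"),
    "deep": Chunk("deep", "Namespaces in depth.", level="expert"),
}
lesson_plan = ["intro", "recap", "deep"]
profile = {"level": "novice"}  # would come from the stored user profile

tree = build_node_tree(lesson_plan, chunks)
page = display_engine(adaptation_filter(tree, profile), skin="plain")
print(page)
```

Each stage only consumes the output of the previous one, so the filter, the display engine and the skin can be replaced independently.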

This architecture allows the adaptation filter, display engine and skin to be modified independently of each other, a facility invaluable both for research and implementation, as now these aspects can be studied and implemented separately. Thus, WHURLE is not tied to any particular user model or interface, both of which can be created by technical authors.

WHURLE contains three distinct linking systems, serving distinct purposes. The first category consists of the intra-chunk links (i.e., links within individual chunks) that are created by the original chunk author and form a part of the chunk. An example would be a chunk containing a question along with a link to another part of the chunk or even to another chunk containing the answer. Secondly, systematic links are automatically generated by the system to provide navigational facilities, based upon the structure of the lesson plan, and the user interface of the learning environment, based upon definitions in the skin. Finally, authored links are manually created by teachers or students and are stored in one or more link bases, separate from the contents. They can point to any available resource: to another chunk in WHURLE or to anything on the web.

WHURLE is an XML system currently implemented using XSLT that is processed on the server, delivering dynamic HTML. In order to minimise the processing overhead, XInclude is used to retrieve chunks as they are required. XSLT and XInclude are processed using the Cocoon XML publishing framework. The user profiles are stored in a MySQL database, chunks and lesson plans are XML files, and configuration and navigation information is specified as request parameters of the URI.

Adaptation techniques in WHURLE

The adaptive hypermedia methods and techniques present in WHURLE (see figure 2.11) can be found in Brusilovsky's taxonomy as described in section 2.1.1. The developers of WHURLE describe the methods and techniques they use for adaptation in several publications [12, 62].

Footnote 4: The information in this section is mainly derived from [13, 12, 62] and [71]

2.2.4 Summary

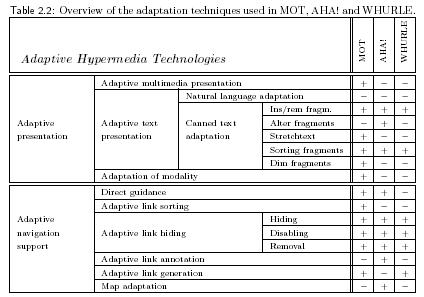

Having discussed the three systems MOT, AHA! and WHURLE, this section continues with an overview of the adaptation methods and techniques used within the three systems. Table 2.2 shows the adaptation techniques as defined by Brusilovsky (see section 2.1.1). MOT and AHA! together cover almost all the adaptation techniques; WHURLE functions in this sense as a sort of reference system. When analysing these systems, no consideration is given to the fact that systems might use each other's contents and techniques. These aspects of interoperability and such are treated in the following chapter; here only aspects typical to the systems themselves are analysed.

Brusilovsky's 2001 taxonomy of adaptation techniques [15] covers all possible ways to express adaptivity in an application for adaptive (educational) hypermedia. Every possible method or technique used in either MOT, AHA! or WHURLE fits in the formulated classification. Not every adaptive technique has to be used in an AEH system for it to be a good system, and having implemented all techniques does not lead to being the 'best' system. It just ensures a more complete system, as it is able to present the same contents and the same level of adaptation in more ways.

Many of the techniques mentioned are simply instances of the same 'mother' technique. Natural language adaptation, for instance, is just the same as canned text adaptation, except for the fact that the information chunks are spoken instead of written. It should not cost that much effort to implement spoken language in an existing AEH application, as the adaptation rules are already defined and the only issue is to update the links that lead to the correct chunks; they can now also lead to other chunks.

As can be seen in table 2.2, the techniques most commonly used are inserting, removing and sorting of fragments and adaptive link hiding.
The first three are presentation techniques; bits and pieces of text, visuals, sound fragments and such are presented to the user according to the state of, among other things, the user model and the adaptation model. They can be in a different order for different students, extra information can be shown in case a student's knowledge level does not match a certain limit, or contents can be removed from the presentation completely if a student is already familiar with the information. In the same way the hiding, disabling and removal of links to other content items, examples of adaptive navigation support, takes place. If, for instance, the system concludes that a student's knowledge or his abilities do not comply with a certain level, it presents links to parts of the course materials that can help the student to improve his knowledge.

The techniques discussed in the last paragraph are the simplest, or standard, adaptation techniques. This is why most developers use them as the basic techniques of their adaptive system. One could argue it is almost impossible to develop an AEH application without incorporating these techniques.

Certain adaptation techniques are not often used; they make systems like MOT, AHA! and WHURLE unique. The usage, or just absence, of certain techniques could say something on the 'quality' of a system. However, statements made in this chapter are only preliminary. The real evaluation is discussed in later chapters.

Adaptive multimedia presentation is a technique only adopted by MOT, through which teachers are enabled to include multimedia objects in their lessons, instead of just text. A premature statement would be that AHA! and WHURLE are more complete, offering the possibility of using more dimensions. The same applies to natural language adaptation. As stated before, neither one of the systems uses this technique.
The absence of alteration and dimming of fragments, just like that of stretchtext, does not seem to be a major limitation for systems which fail to incorporate them, because each system offers a lot of other adaptation techniques to present course materials. Adaptation of modality, however, a feature only adopted by MOT, allows the teacher to let the system choose between different dimensions of information chunks to present a concept. For some students, a picture tells more than a thousand words, while for other students this just might not be the case. This could be an issue on which both AHA! and WHURLE are up for improvement.
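The most commonly used techniques named in this summary (inserting, removing and sorting of fragments, and adaptive link hiding) can be sketched in a few lines. The thresholds, the fragment kinds and the sample data are assumptions made for illustration. None of the three systems is claimed to implement the techniques in this way.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Fragment:
    text: str
    concept: str
    kind: str = "core"  # "core" or "extra" (hypothetical labels)


def present(fragments: List[Fragment], knowledge: Dict[str, int],
            help_below: int = 50, mastered_at: int = 100) -> List[str]:
    selected: List[Fragment] = []
    for frag in fragments:
        level = knowledge.get(frag.concept, 0)
        if frag.kind == "extra" and level >= help_below:
            continue  # inserting: extra explanation appears only for low knowledge
        if frag.kind == "core" and level >= mastered_at:
            continue  # removing: material the student has mastered is dropped
        selected.append(frag)
    # sorting: least-known concepts first; the sort is stable, so ties keep author order
    selected.sort(key=lambda frag: knowledge.get(frag.concept, 0))
    return [frag.text for frag in selected]


def visible_links(links: Dict[str, str], knowledge: Dict[str, int],
                  threshold: int = 50) -> List[str]:
    """Adaptive link hiding: show a link only if its prerequisite concept is known."""
    return [target for target, prerequisite in links.items()
            if knowledge.get(prerequisite, 0) >= threshold]


fragments = [
    Fragment("DTD core", "dtd"),
    Fragment("XML core", "xml"),
    Fragment("XML recap", "xml", "extra"),
    Fragment("XSLT core", "xslt"),
    Fragment("XSLT recap", "xslt", "extra"),
]
knowledge = {"xml": 100, "xslt": 20, "dtd": 60}
links = {"xslt-advanced": "xslt", "dtd-exercises": "dtd"}  # link target -> prerequisite

print(present(fragments, knowledge))
print(visible_links(links, knowledge))
```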

The fact that WHURLE does not use direct guidance as a technique to lead students through the lessons could be a drawback. The same holds for adaptive link sorting, which seems to be a very good way to express the importance of certain course materials. Only AHA! uses adaptive link annotation and map adaptation, two forms of adaptive navigation support. The first one seems to be just another way to do the same trick, so it would do no harm to ignore it. Usually, however, it is thought better to give the student as many signals as possible, to ease the process. In this sense, it would be a gain to annotate the usefulness of links with, for instance, (colored) icons. Map adaptation gives the impression of a quite valuable method to give the student some idea of his position in the course. Dynamic, or adaptive, link generation is at first sight a rather useful method to expand the collection of links, so it could well be an advantage for AHA!.

2.3 Remarks on AEH authoring

This chapter has so far given a view on the research on authoring systems for adaptive educational hypermedia. It has also described the three systems MOT, AHA! and WHURLE, which form the offspring of the research carried out within the Minerva/ADAPT project. This section presents the issues that result from the research on AEH authoring systems. As stated before, according to Devedzic [43] there are conceptual, technological and tools-related issues.

On a conceptual level, course materials created in an AEH system should be made reusable and should be available to other AEH systems. The problem is that most such systems today use different formats, languages and vocabularies for representing and storing the course materials, as well as the teaching strategies they apply, the assessment procedures and the student models. Hence there is generally no way for two different educational applications to interoperate and share their teaching and learning contents, even if they are in the same domain.

An issue of technological order is the slow acceptance of new technologies. XML/RDF languages, for instance, provide a more-or-less standardised syntax, offer a high degree of interoperability between applications and are widely used. The educational world, though, still mainly uses HTML to create educational hypermedia, and HTML does not have the functionalities mentioned.

The third order of issues Devedzic signals is about the incapability of teachers to deal with the tools provided. It is difficult for authors who are not experts in web page design to create pages of a web-based educational application by using current authoring tools, such as TopClass, WebCT or Authorware.

Known problems in the field of adaptive hypermedia are how to adapt, when to adapt, what to adapt, to whom to adapt and what kind of adaptation to apply. Furthermore, AH researchers complain about having no challenging contents and structures large enough for the field.
The educational software world, on the other hand, always felt the lack of personalisation in the existing educational systems. Another issue in this field is the match between educational components and virtual concepts. An issue in the field of AEH research has always been the question whether or not to make a system suitable for the internet. Garzotto and Cristea [36] state the main issue of AEH lies in the design. The proper design, they claim, is the first step towards common design patterns, leading to a better, semantically enhanced authoring system for AEH. This is necessary in order to overcome the obstacle between the educational specialist (the teacher or course developer) and his often limited knowledge of the environment and hence difficulties to deliver his knowledge.

In chapter 4 the issues described here are translated into criteria for evaluating AEH authoring systems.

This chapter approaches the quality question from two sides. First, it describes what software quality is, as an AEH system is, of course, a piece of software. At the same time, software quality aspects which can be used to evaluate AEH applications are marked, so they can be carried over to the evaluation criteria described in chapter 4. The second section describes existing frameworks for the evaluation of (educational) adaptive hypermedia systems. This section mentions some other evaluation methods as well, which also provide issues in AEH authoring that can be turned into criteria later on.

A distinction has to be made between the assessment and the evaluation of adaptive educational hypermedia [47]. Evaluation is done from a system point of view and focuses on system performance and the system's decision-making capabilities. Evaluating means checking the adaptive decisions made by the system, the efficiency and performance of the system and/or the algorithms the system employs. The term assessment refers to a learner-centered approach to system evaluation. The effectiveness of the system is measured by looking at learner outcomes. In both evaluation and assessment, the primary goal is to determine the effectiveness of the adaptive educational hypermedia system. As stated earlier, this research describes an evaluation of design aspects in particular. Learner aspects are not taken into account. When a user is mentioned, a teacher, or course developer, is meant.

Where chapter 2 describes the methods and techniques used to introduce adaptivity in AEH authoring systems, this chapter will chart the underlying technologies and conceptual ideas that enable this adaptivity.
The adaptation techniques as described by Brusilovsky [15] are the reflection of what is done internally by the system; the user takes no notice of these internal processes and only sees the effects through the adaptation techniques used (Footnote here: The user in this research is the teacher or the course developer, though it could apply to students as well ).

These methods and techniques for adaptive navigation and adaptive presentation support are all in the user domain. They can be seen as the front-office. This is in contrast to the issues and criteria mentioned in this chapter, which are in the architectural domain and, by analogy, best regarded as the back-office of the system.

As already mentioned in chapter 1, there is a need for empirical evaluations of adaptive educational hypermedia systems. The reason for this is that only few of such studies exist. Moreover, the ones that exist are of a rather simple nature and have a small sample size [74]. The former is covered in this research by trying to define a full range of criteria, to cover the concerns of all stakeholders. The latter is not, as that would go beyond the scope of this research. However, evaluations were carried out on MOT, AHA! and WHURLE. The limitations of these evaluations arise from the fact that they were not carried out on a large number of test persons. All of this is explained and worked out in chapter 5. Another form of evaluation is formal testing which, for instance, focuses on the correctness of algorithms. This kind of research is not used in this study.

This chapter presents the basis on which the final framework is built. There are several reasons for selecting software quality and existing frameworks for AH evaluation as the foundation. First, there are no evaluation models for AEH authoring applications. The existing literature on this subject only sums up some issues to consider. To deal with this situation, other perspectives are selected, on the basis of relatedness to the subject. In this respect, software quality and AH systems in general are the most closely related subjects.
One could argue educational systems have to be studied to a larger extent than has been done in chapter 2, but the research described here focuses on design aspects, and not on pure educational aspects, such as learner styles.

3.1 Software quality factors

Regarding software engineering, Pressman [67] wrote a landmark work in which he defines software quality as the conformance to the three measurable aspects below. The nature of his work makes this information most valuable.

1. Software requirements; the foundation to measure quality.
2. Specified standards; resulting in a set of development criteria.
3. Implicit requirements; such as maintainability.

Although these three aspects cover the whole range of software quality, the concept remains rather vague. This section makes the definition of software quality more concrete by identifying several factors that affect software quality. These factors can be measured, either directly or indirectly, and are, as such, useful for the development of an evaluation framework. The factors are derived from the three categories mentioned above; together they are a decomposition of the three aspects. As they are selected by Pressman [67] in his landmark work on software engineering, these factors are considered valuable.

3.1.1 McCall's quality factors

McCall and his colleagues [54] define three categories of factors that affect software quality: operational characteristics, the ability to undergo change and the adaptability to new environments. These three categories each contain several quality factors, which are described below.

reliability The extent to which a program can be expected to perform its intended function with required precision.

efficiency The amount of computing resources and code required by a program to perform its function.

integrity The extent to which access to software or data by unauthorised persons can be controlled.

usability The effort required to learn, operate, prepare input and interpret output of a program.

maintainability The effort required to locate and fix an error in a program.

flexibility The effort required to modify an operational program.

testability The effort required to test a program to ensure that it performs its intended function.

portability The effort required to transfer the program from one hardware and/or software system environment to another.

reusability The extent to which a program (or parts of a program) can be reused in other applications -related to the packaging and scope of the function that the program performs.

interoperability The effort required to link one system to another.

|  |  |  |
| --- | --- | --- |
| auditability | error tolerance | operability |
| accuracy | execution efficiency | security |
| communication commonality | expandability | self-documentation |
| completeness | generality | simplicity |
| conciseness | hardware independence | software system independence |
| consistency | instrumentation | traceability |
| data commonality | modularity | training |

When adopting the model described above, each metric is given a weight, varying between 0 (low) and 10 (high). On the basis of these measurements, evaluation is performed. This scale is obviously arbitrary as well as dependent on local products and concerns.
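How such 0 to 10 gradings might be combined into a score per quality factor can be sketched as a weighted sum. This is a hedged illustration only: the mapping of metrics to the usability factor, the equal coefficients and the sample grades are assumptions, not values taken from McCall's model or from this research.

```python
from typing import Dict


def factor_score(grades: Dict[str, int], coefficients: Dict[str, float]) -> float:
    """Combine metric grades (0 = low, 10 = high) into one quality-factor score."""
    for metric in coefficients:
        if not 0 <= grades[metric] <= 10:
            raise ValueError(f"grade for {metric!r} must lie between 0 and 10")
    return sum(coefficients[metric] * grades[metric] for metric in coefficients)


# Hypothetical grading of one system on two metrics assumed to affect usability.
grades = {"operability": 8, "training": 6}
usability_coefficients = {"operability": 0.5, "training": 0.5}

print(factor_score(grades, usability_coefficients))
```

Changing the coefficients changes the outcome, which illustrates why the scale is dependent on local products and concerns.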

3.1.2 Hewlett-Packard's quality factors

The Hewlett-Packard company [48] listed some quality factors in a set called FURPS, which is an acronym for the factors it contains.

| Quality factor | Metrics |
| --- | --- |
| Functionality | feature set, capabilities of the system, generality of the delivered functions, security of the overall system |
| Usability | human factors, overall aesthetics, consistency, documentation |
| Reliability | frequency and severity of failure, accuracy of output results, mean time between failures (MTBF), ability to recover from failure, predictability of the program |
| Performance | processing speed, response time, resource consumption, throughput, efficiency |
| Supportability | maintainability (extensibility, adaptability and serviceability), testability, compatibility, configurability, ease of installation, ease of problem localisation |

The ease of installation metric is of particular interest: it rarely occurs in evaluation lists, yet it has been a factor in the research described in this report.

The models in this section are distinct from the existing evaluation frameworks discussed in the following section. A framework extends over several points of view, whereas these models, which contain software quality factors, cover only purely software-related aspects. They are separated from other evaluation methods and quality issues on purpose, as they descend directly from the concept of software quality introduced at the beginning of this section.

3.2 Existing evaluation frameworks

Little research has been carried out on the evaluation of regular adaptive hypermedia systems. Several frameworks have been constructed, and some of the most recent ones are discussed here. As they are not specifically designed to evaluate educational AH systems, not all quality aspects can be used in this research. The two frameworks for the evaluation of adaptive educational hypermedia discussed first in this section both follow a layered approach, which splits the system under evaluation into critical parts, i.e., it separates the input acquisition from the adaptation decision process. Furthermore, both are of an empirical nature. As already stated, this is the kind of research currently most needed. Another reason for selecting these two frameworks is that they are recent: both present an up-to-date view on the evaluation of (educational) adaptive hypermedia.

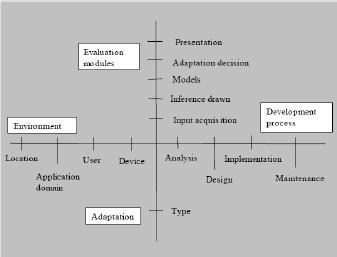

3.2.1 The framework of Gupta & Grover

Gupta and Grover present a framework for evaluating AH systems [49] in which they elaborate on the layered approach of AH evaluation. According to them, a layered framework for evaluation offers the best identification of adaptation failures and other errors. The framework they suggest consists of four dimensions: environment, adaptation, development process and evaluation modules. These four dimensions are orthogonal to each other, i.e., all the evaluation modules should address all the components of environment and adaptation during each phase of the development process. Figure 3.1 shows the framework as suggested by Gupta and Grover.

Not all the criteria formulated by Gupta & Grover are used in this research. The following is a short description of the subjects which are used in the research.

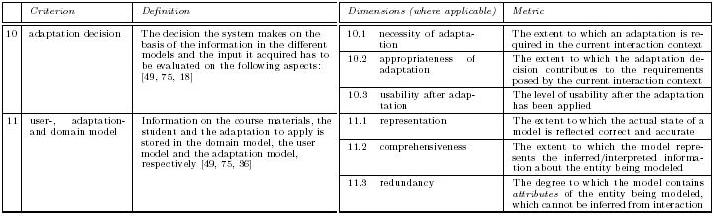

The environment dimension covers everything the system has to adapt itself to, such as location and device. As this research is about evaluating educational AH systems in a research environment, these characteristics are stable and do not need to be taken into consideration.

The adaptation dimension has only two possible types, static and dynamic adaptation, depending upon the time and process of adaptation. Static adaptations are specified by the author at design time or determined only once at the startup of the application. Dynamic adaptation occurs during runtime, depending on various factors such as inputs given by the users during use, changes in the user model and adaptation decisions taken by the AHS. An example of a dynamically adapting system is AHA!.

The evaluation modules dimension comes from the research on layered frameworks as proposed by [18] and [66]. Gupta and Grover evaluate the modules with respect to the other dimensions of the framework. In this layer, the input acquisition has to be checked for correctness, i.e., reliability, accuracy, precision, etc. Along with this, the interpretations the system makes of the given inputs have to be evaluated, for both static and dynamic adaptations. On the basis of this input, the adaptive system draws semantic conclusions, the so-called inferences drawn. These have to be evaluated as well. Based on the inferences drawn, each system uses several models, such as the domain, user, adaptation and presentation models. These models are necessary for achieving the required adaptation and are supposed to imitate the real world. They need to be evaluated for validity, i.e., correct representation of the entity being modelled, comprehensiveness of the model, redundancy of the model, precision of the model and sensitivity of the modelling process.

Evaluation of the adaptation decision is done to check whether the system, given a set of properties in the user model, follows the optimal adaptation when more than one adaptation is possible. Criteria used are necessity, appropriateness and acceptance of adaptation. The evaluator should check that more adaptivity does not decrease usability. The presentation is evaluated on the basis of criteria such as completeness, coherence, timeliness of adaptation and user control over adaptation.

The development process dimension takes care of evaluating the software life cycle, i.e., analysis, design, implementation and maintenance. Because the three systems described in this report already exist, this dimension can be transformed into a check between the initial and the achieved goals of the systems, with respect to the other three dimensions.

The main objective of Gupta & Grover when developing their framework was to present a method to evaluate an AEH authoring application in its complete context. This means that the application is not only assessed on its adaptive capabilities; the evaluation framework also integrates the AH development process, the accessing environment, the different levels and types of adaptations involved and the evaluation modules of layered frameworks. Not all of these aspects are taken into account in the research described in this report.

3.2.2 The framework of Weibelzahl

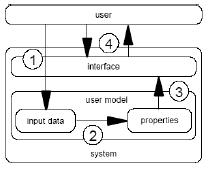

Weibelzahl [75, 74] constructed a framework for the evaluation of adaptive systems in his Ph.D. dissertation. He elaborated on the state of affairs in evaluation frameworks for adaptive systems (not necessarily educational ones) and incorporated about forty existing studies in developing his own framework. In doing so, he took into account the layered approach of adaptive systems evaluation, first described by Brusilovsky, Karagiannidis and Sampson [18]. The evaluation framework of Weibelzahl is claimed to be 'more complete' than frameworks developed before. It distinguishes, for instance, input data assessment from inference of user properties, a relevant distinction that should not be overlooked.

In developing the framework, Weibelzahl had two objectives in mind. First, he observed the need for specifying what has to be evaluated to guarantee the success of adaptive systems. Second, he wanted a grid that facilitates the specification of criteria and methods that are useful for the evaluation. The framework focuses on empirical evaluations and not on formal methods, such as verification of algorithms. Weibelzahl states that an empirical approach is inevitable when evaluating the real-world value of an adaptive system. Summarising, he proposes "a systematic approach for the evaluation of adaptive systems that will encourage and categorise future evaluations".

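The framework, displayed in figure 3.2, distinguishes four evaluation steps: evaluation of the input data, of the inference of user properties, of the adaptation decision and of the total interaction. Together these can be seen as an instance of the layered approach of [18], as each step is a prerequisite to the following steps.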

Figure 3.2 shows the architecture of an adaptive system in combination with the information flows between the components of the system. The numbered bullets refer to the four evaluation steps mentioned before. The layered nature of the framework follows from the fact that the evaluation of all previous steps is prerequisite to the current step. For instance, the system probably makes the wrong adaptation decision if it is reasoning on the basis of incorrect user information. The four evaluation steps are described below.

To build a user model, the system acquires direct or indirect input from the user (e.g., appearance of specific behaviour, utterances, answers, etc.). These data are the basis of all further inferences, so their reliability and validity are of high importance. This applies to external validity as well. For instance, in adaptive learning systems, visited pages are usually treated as read and sometimes even as known. However, users might have scanned the page only briefly for specific information, without paying attention to other parts of the page. Relying on such input data might therefore cause maladaptations.

Based on the input, properties of the user are inferred. The inference can be derived in many different ways, ranging from simple rule-based algorithms to Bayesian networks or case-based reasoning systems. As in the first step, the validity of the inference can be evaluated, which means checking whether the inferred user properties really do exist.

During the so-called downward inference, the system decides how to adapt the interface. Usually there are several possible adaptations given the same user properties. The aim of this evaluation step is to find out whether the chosen adaptation decision is the optimal one, given that the user properties have been inferred correctly.

The last step evaluates the total interaction by observing the system behaviour and the user behaviour, i.e., the usability and the performance. Several dimensions of the system behaviour may be evaluated. The most important is probably the frequency of adaptation; the frequency of certain adaptation types is important, too. The user's behaviour can be evaluated separately and is, in fact, the most important part. The adaptation is successful only if the user has reached his goal and is satisfied with the interaction. This final step therefore has to assess both task success (or performance) and usability. For some systems the performance of the users in terms of efficiency and effectiveness is crucial. For example, the success of an adaptive learning system depends on the users' learning gain (besides other criteria).

The evaluation framework developed by Weibelzahl [75] appears to fit effortlessly into the framework of Gupta & Grover [49]. The four steps Weibelzahl mentions roughly match the evaluation modules described by Gupta & Grover. However, Weibelzahl stresses the importance of a really thorough investigation of evaluation criteria. His extensive research [74] gives a comprehensive view of existing evaluation methods and criteria. The results of that study are included in this research.
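The prerequisite relation between the four evaluation steps can be illustrated with a small sketch. It assumes that each step can be reduced to a single pass/fail check, which is a strong simplification of an empirical evaluation; the names `Step` and `evaluate_layers` and the example outcomes are hypothetical and do not stem from Weibelzahl's work.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Step:
    name: str
    check: Callable[[], bool]  # True if the evaluation of this layer succeeded


def evaluate_layers(steps: List[Step]) -> Tuple[List[str], Optional[str]]:
    """Evaluate the steps in order and stop at the first one that fails.

    Returns the names of the steps that passed and the name of the failing
    step (None if every step passed). Later steps are not evaluated after a
    failure, because their results would rest on an invalid earlier layer.
    """
    passed: List[str] = []
    for step in steps:
        if not step.check():
            return passed, step.name
        passed.append(step.name)
    return passed, None


# Made-up outcomes: the input data are valid, the inference is not.
steps = [
    Step("input data", lambda: True),
    Step("inference of user properties", lambda: False),
    Step("adaptation decision", lambda: True),
    Step("total interaction", lambda: True),
]
passed, failed = evaluate_layers(steps)
print(passed, failed)
```

In this example the evaluation stops at the inference step, so a poor result in the total interaction would not be attributed to the adaptation decision by mistake.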

3.2.3 Other evaluation methods

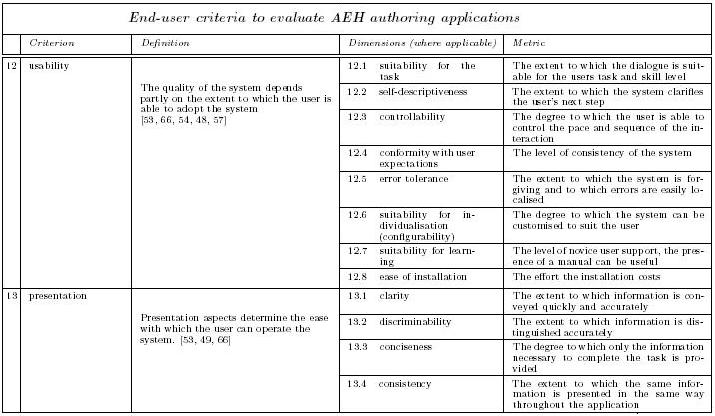

The two frameworks described before both follow the layered approach as developed by Karagiannidis et al. [18]. Another way of investigating AEH authoring applications is by comparing them with each other. An example of this feature-by-feature comparison, as it is referred to, is described in [43]. Typical features to study in this kind of evaluation are, e.g., adaptivity in organising the course contents, personalised curriculum sequencing and adaptive link annotation.

Cristea and Garzotto describe a taxonomy that contains key dimensions for the design of AEH applications and that guides teachers and course developers through the design process [36]. They stress the importance of a sound design. Important issues are the design of the content domain (concept types and their relations), the instructional view (the learner-specific path through the application), the detection mechanism, and the adaptation and user model. Although this model does not offer evaluation criteria as such, it is a kind of evaluation tool in itself. The different parts of the model are well suited to analysing an AEH system; they are not, however, useful as quality factors. An AEH system that complies closely with the model can be regarded as a good system. Conversely, if a system fails to match the majority of the design dimensions, it can, to a certain extent, be considered an inferior system. In other words, the design aspects mentioned are essential for any AEH authoring application.

Kravcik and others carried out a quantitative and qualitative evaluation of AEH systems [53] and propose issues such as error tolerance, personalisation, simplicity and consistency. The issues they bring up are directly derived from ISO standards, so these standards are used to deliver the criteria for the evaluation framework. The evaluation issues useful for the evaluation framework are derived from ISO 9241, parts 10 and 12.

As stated before, the development of AEH systems has traditionally received little attention from the educational world; however, this has been changing recently. Min and De Diana [59] developed a questionnaire to assess AEH authoring systems. As they are part of the Minerva/ADAPT project, the focus is on design aspects. The questionnaire combines analysis (dimensions, models, learning styles) and evaluation (editors for the different dimensions, completeness, user-friendliness). It provides some valuable aspects that are to be included in the framework.

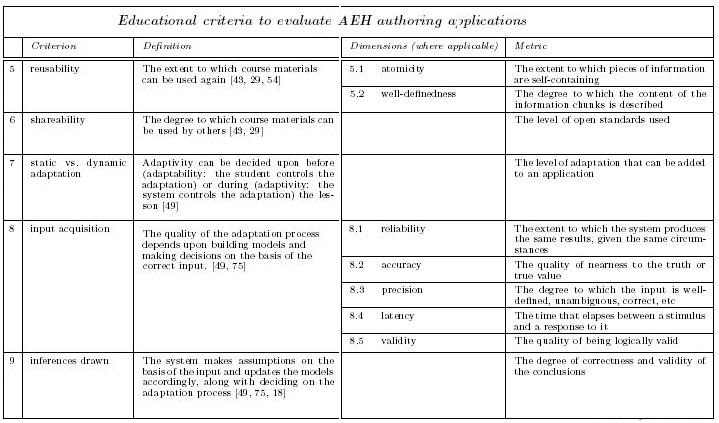

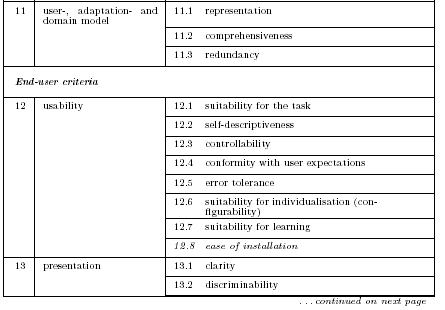

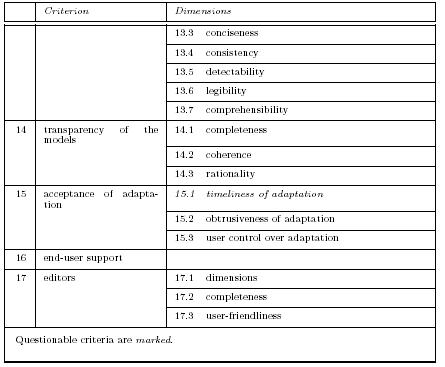

4.1 Criteria to evaluate AEH authoring applications

In order to present a framework for evaluating AEH authoring systems, this section presents several criteria on the basis of which aspects such as performance and effectiveness can be measured. The criteria are divided into three subsections, according to the group of aspects they influence: technological, educational and end-user related. The group of technological issues involves aspects of languages (such as HTML and XML/RDF) and interoperability between systems. The subsection on educational criteria is a rather broad one; it includes everything from conceptual structures to standardised metadata languages. The group of tools-related or end-user issues covers everything related to the teacher or author and his capability to perform tasks in a certain AEH environment. All the criteria extracted from literature, issues, other frameworks or methods are presented in the following sections, without separation by origin or citation.

Adaptation techniques (see section 2.1.1), such as the hiding or dimming of fragments of text, are the effect of the adaptation process carried out by the system. They are referred to as the part of the system that is detectable by students, the so-called 'front-office'. Adaptation techniques can be traced back by looking at aspects in the educational/conceptual domain, the adaptation decision in particular.

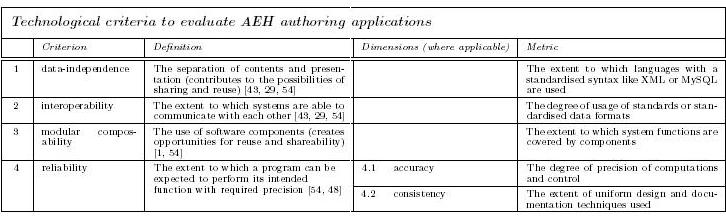

4.1.1 Technological criteria to evaluate AEH

This category of criteria includes aspects of adaptive educational hypermedia applications that are concerned with the technology of the systems. The technological aspects, together with the ones mentioned in the following two sections, form a part of the 'back-office' of AEH systems and are not to be confused with the adaptation techniques, described in section 2.1.1. The criteria in this domain assess the technological aspects that support the educational (conceptual) processes.